In 1950, Alan Turing posed a question that would shape the future of artificial intelligence: Can machines think? His answer was the now-famous Turing Test—a way to judge machine intelligence by its ability to mimic human conversation. While revolutionary for its time, the test now feels outdated.

Today’s AI systems generate human-like responses with ease, but does that mean they truly understand? The line between performance and intelligence has blurred. In a world of neural networks and large language models, it's time to look beyond imitation and rethink how we evaluate what it really means for a machine to "think."

The Turing Test is often misunderstood. It doesn’t test whether a machine can think in the human sense. It checks whether a machine can simulate human-like responses well enough to fool someone. There’s a big gap between performing a convincing act and possessing real understanding.

The power of the Turing Test is its simplicity. It avoids deep philosophical debates by looking only at behavior. If a machine can simulate human conversation, it passes. The test does not ask how answers are derived, only whether they're convincing. It prizes performance over process, which makes it a tidy, if limited, means of assessing artificial intelligence.

But that's also the issue. Some systems get through the Turing Test by gaming it. They sidestep direct answers, deflect questions, or produce vague emotional language, strategies that don't indicate real understanding. This performance-based framework can reward superficial mimicry rather than real intelligence. That's why many researchers now consider the Turing Test a beginning, not an end.

AI has come a long way from what Turing envisioned. We're not merely talking to robots; we're assigning tasks, solving problems, and creating content with sophisticated neural networks. These programs process data in ways humans never could. Yet they still can't perceive as we do. They have no beliefs, emotions, or awareness, only patterns and probabilities.

The Turing Test doesn’t capture this complexity. It’s like judging a book by how well it matches your handwriting instead of what it says. Evaluation needs to go deeper. Researchers now look at how models reason, whether they can generalize to new problems, and whether they show any sign of common sense, even in narrow domains.

This is where modern benchmarks come in. Instead of asking, "Can it fool me?" evaluators ask whether a system can reason, generalize, and apply common sense.

These are harder challenges. They reveal what the Turing Test hides: imitation isn't the same as intelligence.

Turing’s original test focused solely on text-based interaction, ignoring the complexity of real-world intelligence. Today’s AI navigates images, sound, and movement—far beyond what the test measures. It captures only a narrow slice of capability in a controlled setting, falling short in an age where AI operates in dynamic environments like homes, hospitals, and everyday devices.

Researchers now use a wide mix of evaluation tools, many of which don’t try to measure "humanness" at all. Instead, they focus on measurable performance across tasks. How accurate is the output? How consistent? How safe?
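To make "How consistent?" concrete, here is a minimal Python sketch of one possible consistency measure: the share of repeated runs that agree with the most common answer. The function name `consistency` and the sample answers are hypothetical and chosen only for illustration; real evaluations may define consistency differently.

```python
from collections import Counter


def consistency(answers):
    """Fraction of runs that agree with the most common answer (assumed metric)."""
    if not answers:
        raise ValueError("need at least one answer")
    # most_common(1) returns [(answer, count)] for the top answer.
    top_count = Counter(answers).most_common(1)[0][1]
    return top_count / len(answers)


# Hypothetical outputs from asking the same question four times.
runs = ["Paris", "Paris", "Lyon", "Paris"]
rate = consistency(runs)
print(f"consistency: {rate:.2f}")
```

A score of 1.0 would mean every run gave the same answer. Note that this says nothing about whether the answer is right, which is why consistency is usually reported alongside accuracy.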

One major shift has been toward task-based benchmarks. These datasets present AI with a variety of challenges, from language translation and image recognition to logical puzzles and programming. The goal isn't to mimic humans but to complete tasks efficiently and correctly, which makes the results easier to score and compare.
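As a rough illustration of why task-based results are easy to score and compare, the sketch below computes exact-match accuracy per task. Everything here is an assumption made for the example: `score_by_task`, the `toy_model` lookup table standing in for a real system, and the three sample items. Actual benchmarks use far larger datasets and often more forgiving scoring rules.

```python
from collections import defaultdict


def score_by_task(examples, model):
    """Return exact-match accuracy for each task name (assumed scoring rule)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for task, prompt, expected in examples:
        total[task] += 1
        # Compare case-insensitively and ignore surrounding whitespace.
        if model(prompt).strip().lower() == expected.strip().lower():
            correct[task] += 1
    return {task: correct[task] / total[task] for task in total}


def toy_model(prompt):
    """Hypothetical stand-in for a real AI system: a fixed lookup table."""
    answers = {"2 + 2": "4", "Translate 'chat' to English": "cat"}
    return answers.get(prompt, "unknown")


# Hypothetical benchmark items: (task, prompt, expected answer).
examples = [
    ("arithmetic", "2 + 2", "4"),
    ("arithmetic", "3 * 3", "9"),
    ("translation", "Translate 'chat' to English", "cat"),
]

scores = score_by_task(examples, toy_model)
for task, accuracy in scores.items():
    print(f"{task}: {accuracy:.0%}")
```

Because each task yields a number rather than a judge's impression, two systems can be compared by running them on the same examples.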

Another technique is interpretability testing. How transparent is the model's decision-making? If it gives a wrong answer, can we tell why? A black box model might be powerful, but it's hard to trust. Researchers now care just as much about explainability as output quality.

Ethical evaluation is also growing. It's not just about what AI can do—it's about what it should do. Does the system reinforce bias? Can it be tricked into producing dangerous content? These concerns weren't part of the original Turing Test, but they're vital now.

Importantly, many of these tests are open-ended. There's no fixed bar to clear, like "fooling a judge." It’s a continuous process of refining performance, adapting to new standards, and identifying weaknesses. AI evaluation has become a living ecosystem — not a single finish line.

The term "AI evaluation" comes up often in these circles, but it's less about a specific test and more about an entire mindset shift. We're learning that intelligence is layered. It's not binary. It develops in pieces (reasoning, memory, creativity, safety), and each layer needs its own method of evaluation.

The Turing Test still holds symbolic value. It reminds us that how a system behaves can matter when judging intelligence. But it’s no longer the benchmark we rely on to track AI progress. That role now belongs to broader, more demanding forms of AI evaluation.

Its simplicity once made it powerful. But that same elegance may have narrowed our thinking, focusing too much on imitation and not enough on deeper understanding. Modern AI needs more than a chat test.

Today, we look at how AI reasons, adapts, and explains itself. A single test won't work anymore. Instead, we need layered evaluations covering safety, transparency, ethics, and performance across real tasks.

So, the question is shifting. It's no longer "Can machines think?" Now it's "Can we trust how they think?" The Turing Test still matters—just not in the way it used to.

The Turing Test sparked a vital conversation about machine intelligence, but today's AI demands more. While it still holds symbolic value, it's no longer enough to simply mimic human conversation. Modern AI evaluation must dig deeper—testing reasoning, transparency, and ethical behavior. Intelligence is now seen as layered and complex, requiring a range of tools to measure it properly. As AI continues to evolve, our methods of assessing it must evolve, too—with trust, fairness, and real understanding at the center.

To decide which of the shelf and custom-built machine learning models best fit your company, weigh their advantages and drawbacks

Know how to produce synthetic data for deep learning, conserve resources, and improve model accuracy by applying many methods

Learn how to lock Excel cells, protect formulas, and control access to ensure your data stays accurate and secure.

Uncover how The Turing Test shaped our understanding of artificial intelligence and why modern AI evaluation methods now demand deeper, task-driven insights

Generate your OpenAI API key, add credits, and unlock access to powerful AI tools for your apps and projects today.

How to visualize proteins using interactive, AI-powered tools on Hugging Face Spaces. Learn how protein structure prediction and web-based visualization make research and education more accessible

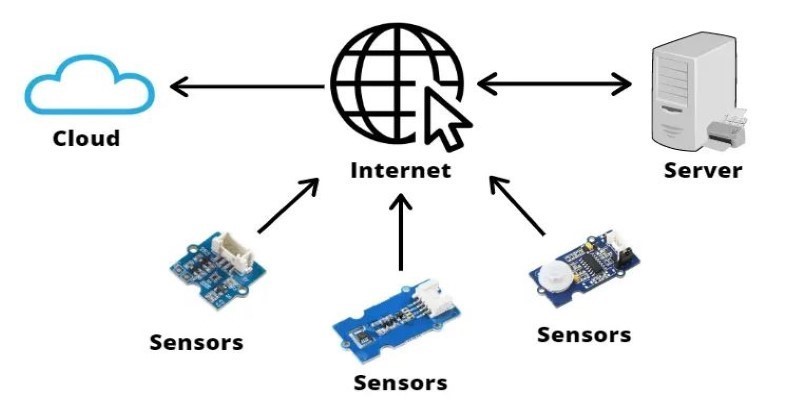

How Edge AI is transforming technology by running AI on local devices, enabling faster processing, better privacy, and smart performance without relying on the cloud

Know the pros and cons of using JavaScript for machine learning, including key tools, benefits, and when it can work best

Create profoundly relevant, highly engaging material using AI and psychographics that drives outcomes and increases participation

Fastai provides strong tools, simple programming, and an interesting community to empower everyone to access deep learning

How the FILM model uses a scale-agnostic neural network to create high-quality slow-motion videos from ordinary footage. Learn how it works, its benefits, and its real-world applications

Explore the role of probability in AI and how it enables intelligent decision-making in uncertain environments. Learn how probabilistic models drive core AI functions