Artificial intelligence makes decisions that can look effortlessly precise, and at the heart of that precision is probability. AI rarely relies on rigid rules or pure logic alone; it handles uncertainty in real time. Whether an AI is recognizing speech, recommending a movie, or navigating a road, it is constantly estimating the most likely outcomes.

Probability allows AI to function in an unpredictable world, ranking options, refining guesses, and adapting on the fly. It’s not a minor tool—it’s the core mechanism. Without it, AI would falter. Probability doesn’t just support AI—it defines how it works, learns, and makes sense of complexity.

AI operates in unpredictable, messy environments. Unlike a calculator, which runs on clear inputs, AI must interpret vague signals, incomplete data, and changing patterns. Probability helps make sense of all that. It gives AI systems a way to quantify how likely something is to be true even when information is incomplete.

Imagine a voice assistant trying to understand a command in a noisy room. It doesn’t “hear” every word clearly—it uses a probabilistic model to guess what was said based on prior patterns and context. That’s how it can still respond accurately even if some of the input is unclear.

In medical diagnosis tools, AI doesn't offer one final answer. It provides a probability for each possible condition based on symptoms and test results. This approach mirrors how humans think under uncertainty but with far more consistency and speed. Instead of getting stuck when data is unclear, AI calculates which options are most likely—and acts accordingly.

This shift from deterministic rules to probabilistic reasoning is what allows AI to work in the real world, where nothing is ever 100% certain.

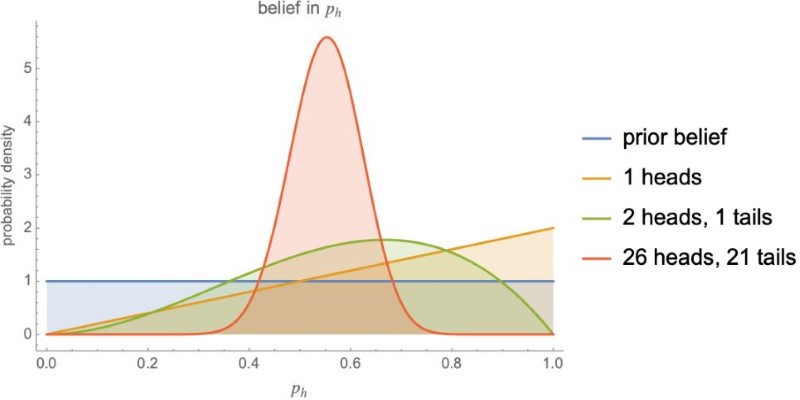

One powerful tool in AI's probabilistic toolkit is Bayesian inference. It helps AI systems refine their predictions as they get more data. The idea is simple: start with an initial belief (called the prior), collect new evidence, and then update that belief according to how well it explains the evidence. The updated belief is called the posterior, and it serves as the prior for the next round. This constant updating is crucial for AI that learns on the fly.

Take image recognition. An AI may start with a weak guess that an object is a dog. However, as it processes features like fur texture or ear shape, it updates the probability. By the end, it might be 91% sure—and that's usually good enough to act on.
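The dog example can be sketched as two rounds of Bayes' rule. This is a minimal illustration, not how a production vision system works: the starting belief of 0.30 and the likelihood values for each observed feature are invented numbers, and `bayes_update` is a hypothetical helper name.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(H | evidence) given P(H) and the two likelihoods.

    likelihood_if_true  = P(evidence | H)
    likelihood_if_false = P(evidence | not H)
    """
    numerator = likelihood_if_true * prior
    evidence = numerator + likelihood_if_false * (1.0 - prior)
    return numerator / evidence


# Assumed starting belief that the object is a dog (a weak guess).
belief = 0.30

# Assumed likelihoods for two observed features:
# (P(feature | dog), P(feature | not dog))
observations = [
    (0.8, 0.3),  # fur texture
    (0.7, 0.2),  # ear shape
]

history = [belief]
for p_if_dog, p_if_not_dog in observations:
    # Yesterday's posterior becomes today's prior.
    belief = bayes_update(belief, p_if_dog, p_if_not_dog)
    history.append(belief)

print([round(b, 3) for b in history])
```

With these made-up numbers the belief rises from 0.30 to about 0.53 after the first feature and to about 0.80 after the second. Each update reuses the previous posterior, so earlier evidence is adjusted rather than discarded.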

This method is particularly useful when data is noisy or limited. In financial forecasting or weather prediction, systems use Bayesian models to revise expectations every time new numbers come in. The system doesn't throw away old information—it just adjusts it.

The beauty of this approach lies in its adaptability. Unlike hard-coded rules, probabilistic models adjust naturally with each experience, helping AI remain accurate over time without requiring full retraining.

Probabilistic models drive many core AI functions. These include simple tools like Naive Bayes classifiers and complex structures like Bayesian networks or Markov models. Each of these systems turns data into predictions based on probability theory.

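In spam detection, Naive Bayes calculates the probability that a message is spam from features such as keywords and sender behavior, treating each feature as independent given the class. That assumption rarely holds exactly, yet the model is fast and often effective in practice. It doesn't need to understand language, only how likely each feature is in spam versus legitimate mail.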
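A toy version of that calculation might look like the following. It is a sketch under stated assumptions: the four training messages are invented, only word counts are used as features, and add-one (Laplace) smoothing is one common choice among several. The function names `train` and `spam_probability` are hypothetical.

```python
import math
from collections import Counter

# Invented training data, for illustration only.
TRAIN = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]


def train(examples):
    """Count words per class and messages per class."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def spam_probability(text, model):
    """Return P(spam | words), assuming words are independent given the class."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        # Start from the class prior, then add one log-likelihood per word.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.split():
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing avoids zero probabilities.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        log_scores[label] = score
    # Normalize in a numerically stable way.
    top = max(log_scores.values())
    weights = {k: math.exp(v - top) for k, v in log_scores.items()}
    return weights["spam"] / (weights["spam"] + weights["ham"])


model = train(TRAIN)
print(round(spam_probability("free money", model), 2))
print(round(spam_probability("monday meeting", model), 2))
```

Working in log space keeps long messages from underflowing to zero, which is why the scores are summed rather than multiplied.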

More advanced models, like Bayesian networks, can represent dependencies between variables. For example, in disease diagnosis, they can link symptoms to likely conditions and update those links as more information arrives.
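To make the idea of dependencies concrete, here is a small sketch of a four-variable network evaluated by brute-force enumeration. The structure (flu influences fever and cough, smoking influences cough) and every probability in it are assumptions chosen for illustration, not clinical values. Real systems use dedicated libraries and more efficient inference.

```python
from itertools import product

# Assumed probabilities, for illustration only.
P_FLU = 0.10
P_SMOKER = 0.20
P_FEVER_GIVEN_FLU = {True: 0.90, False: 0.05}
# Cough depends on two parents: (flu, smoker).
P_COUGH_GIVEN = {
    (True, True): 0.95,
    (True, False): 0.80,
    (False, True): 0.40,
    (False, False): 0.05,
}


def bernoulli(p_true, value):
    """Probability of a boolean value given P(value is True)."""
    return p_true if value else 1.0 - p_true


def joint(flu, smoker, fever, cough):
    """Joint probability, factored along the network's edges."""
    return (
        bernoulli(P_FLU, flu)
        * bernoulli(P_SMOKER, smoker)
        * bernoulli(P_FEVER_GIVEN_FLU[flu], fever)
        * bernoulli(P_COUGH_GIVEN[(flu, smoker)], cough)
    )


def posterior_flu(**evidence):
    """Return P(flu | evidence) by summing over all consistent worlds."""
    names = ("flu", "smoker", "fever", "cough")
    mass = {True: 0.0, False: 0.0}
    for values in product([True, False], repeat=len(names)):
        world = dict(zip(names, values))
        if all(world[name] == seen for name, seen in evidence.items()):
            mass[world["flu"]] += joint(**world)
    return mass[True] / (mass[True] + mass[False])


print(round(posterior_flu(), 3))
print(round(posterior_flu(cough=True), 3))
print(round(posterior_flu(cough=True, fever=True), 3))
print(round(posterior_flu(cough=True, smoker=True), 3))
```

In this toy network a cough raises the probability of flu, adding a fever raises it further, and learning that the patient smokes lowers it again because smoking offers a competing explanation for the cough.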

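Deep learning, although built around learned representations rather than explicit probabilistic models, still relies on probability. A classifier typically doesn’t just say, “That’s a dog.” It outputs a score for each class that can be read as, “There’s an 85% chance that’s a dog.” These scores help with confidence scoring, risk management, and even safety decisions in applications like autonomous driving, although raw network scores are not always well calibrated and may need adjustment before they are treated as true probabilities.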
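One common way a network turns raw output scores into class probabilities is the softmax function. The sketch below assumes three classes and invented raw scores (logits); it shows only the final conversion step, not the network that would produce those scores.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Assumed class labels and raw scores, for illustration only.
labels = ["dog", "cat", "fox"]
logits = [2.0, 0.0, -1.0]

probs = softmax(logits)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.2f}")
```

With these numbers "dog" receives roughly 0.84, close to the 85% figure in the text. Larger gaps between scores produce more confident outputs.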

In reinforcement learning, agents don’t operate with fixed instructions. They learn to maximize rewards through trial and error, often relying on probability distributions over actions. This allows them to weigh outcomes, explore alternatives, and adapt to new environments with flexibility.
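A minimal sketch of an agent that samples from a probability distribution over actions is shown below. It assumes a three-armed bandit with invented reward probabilities, a softmax (Boltzmann) policy, and running-average value estimates. These are simplifications chosen for brevity, and the function names are hypothetical.

```python
import math
import random

# Hidden from the agent: chance that each action pays a reward of 1.
TRUE_REWARD_PROB = [0.2, 0.5, 0.8]


def action_probabilities(values, temperature=1.0):
    """Softmax over value estimates; lower temperature means greedier."""
    scaled = [v / temperature for v in values]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def choose_action(values, rng, temperature=1.0):
    """Sample an action index according to its probability."""
    probs = action_probabilities(values, temperature)
    return rng.choices(range(len(values)), weights=probs, k=1)[0]


def run(steps=2000, seed=0, temperature=0.1):
    rng = random.Random(seed)
    estimates = [0.0] * len(TRUE_REWARD_PROB)
    counts = [0] * len(TRUE_REWARD_PROB)
    for _ in range(steps):
        action = choose_action(estimates, rng, temperature)
        reward = 1.0 if rng.random() < TRUE_REWARD_PROB[action] else 0.0
        counts[action] += 1
        # Incremental running average of observed rewards.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run()
print([round(e, 2) for e in estimates], counts)
```

Because actions are sampled rather than chosen greedily, the agent keeps occasionally trying options that currently look worse, which is how it can discover that its early estimates were wrong.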

Every prediction AI makes—whether about a customer’s preferences or a stock market shift—is based on a careful calculation of risk and likelihood. Without this structure, intelligent systems would be blind to uncertainty and frozen by indecision.

Probability allows AI to work in high-stakes, fast-moving settings. Self-driving cars constantly evaluate the likelihood that another vehicle will stop, that a pedestrian will cross, or that a signal will change. Based on those odds, they choose the safest action. These calculations repeat many times per second, blending speed with safety.

In recommendation systems like Netflix or Amazon, AI analyzes viewing or shopping history to assign probabilities to future interests. It doesn’t guess randomly—it ranks possibilities and offers those most likely to match user behavior.

Healthcare applications depend heavily on probabilistic models. Diagnostic tools assess test results and symptoms and then estimate the likelihood of a condition. This allows for quicker, more personalized treatment—even when data is incomplete.

In fraud detection, AI uses probabilities to flag transactions that seem unusual. It calculates how closely a given activity matches known fraud patterns. It might not accuse, but it raises a red flag when the probability passes a threshold.
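The thresholding step can be sketched in a few lines. The sketch assumes some upstream model has already produced a fraud probability for each transaction. The `Transaction` class, the example values, and the 0.9 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    tx_id: str
    fraud_probability: float  # assumed output of an upstream model


def flag_for_review(transactions, threshold=0.9):
    """Return IDs of transactions whose fraud probability meets the threshold."""
    return [t.tx_id for t in transactions if t.fraud_probability >= threshold]


# Invented example data.
batch = [
    Transaction("tx-001", 0.02),
    Transaction("tx-002", 0.95),
    Transaction("tx-003", 0.61),
]

flagged = flag_for_review(batch)
print(flagged)
```

Lowering the threshold catches more fraud at the cost of more false alarms, so in practice the value is a business decision rather than a fixed constant.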

Probabilistic reasoning is also valuable because it makes uncertainty visible. Instead of returning a bare yes or no, a probability-based system can report how confident it is in each answer. This clarity is vital when AI is used in sensitive areas like law, finance, or medicine.

Probability is the core that powers AI, allowing it to make informed decisions despite uncertainty. It transforms raw data into predictions, updates beliefs in real time, and helps systems adapt to ever-changing environments. Whether in self-driving cars, medical diagnostics, or recommendation engines, AI's ability to reason probabilistically ensures smarter, more reliable outcomes. Without probability, AI wouldn't be able to function in the real world, where conditions are rarely perfect. It's this flexibility, grounded in mathematical reasoning, that truly makes AI intelligent and capable of navigating the complexities of our world.
