Twitter is a strange kind of public diary. People pour out thoughts, frustrations, jokes, outrage, and joy in real time. For businesses, researchers, and developers, it's an open window into what people care about. That's where sentiment analysis steps in. It's the process of figuring out whether a piece of text expresses a positive, negative, or neutral feeling. When applied to Twitter, this can reveal how people feel about a product, event, brand, or issue minute by minute.

While the concept might seem technical at first, getting started with sentiment analysis on Twitter is more accessible than you might expect. With the right tools, a bit of curiosity, and an understanding of how to handle the data, you can start making sense of online opinions.

Sentiment analysis isn't about reading minds. It's about analyzing patterns in language. Tweets are short and often filled with slang, emojis, sarcasm, and hashtags, which makes them harder to analyze than longer texts such as emails or news articles. Still, with the right model, you can usually classify whether a tweet expresses something positive, negative, or neutral.

Twitter data is ideal for sentiment analysis because it’s continuous, public (most tweets are visible), and fast-moving. People talk about everything from movies to customer service to world events, all in one place. That makes Twitter useful for brand monitoring, political trend analysis, and understanding public mood.

The process typically starts by collecting tweets based on keywords, hashtags, or from specific accounts. You then clean the data—removing things like links, mentions, and non-text elements. Next comes the core step: feeding the cleaned tweets into a model trained to detect sentiment.

Models range from simple rule-based systems that rely on lists of positive and negative words to deep learning models, such as BERT or RoBERTa, which take context into account and are better at picking up subtle cues like sarcasm. While pre-built sentiment models exist, custom training can offer more accurate results, especially in industries with specific jargon or where sarcasm is common.
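To make the rule-based end of that range concrete, here is a minimal sketch of a word-list scorer. The word lists and the function name `lexicon_sentiment` are invented for illustration and are far smaller than any real lexicon.

```python
import re

# Tiny illustrative word lists (assumed for this example, not a real lexicon).
POSITIVE = {"good", "great", "love", "amazing", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "broken"}

def lexicon_sentiment(text: str) -> str:
    """Label text by counting positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_sentiment("I love this phone, the camera is amazing"))  # positive
print(lexicon_sentiment("Oh great, my screen is broken again"))       # neutral
```

The second example shows the limitation: the sarcastic "great" cancels out "broken", so a clearly unhappy tweet comes back as neutral. Context-aware models are meant to do better on cases like this.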

Before you can analyze anything, you need access to Twitter’s data. Twitter provides APIs that allow developers to collect tweets. The most commonly used is the Twitter API v2, which requires a developer account. Once approved, you can use tools like Tweepy (a Python library) to connect to the API and start pulling tweets that match your search criteria.

Say you want to analyze sentiment around a new phone launch. You’d use the API to search for tweets containing the product name, model number, or relevant hashtags. You can collect a set number of tweets or monitor them over time.
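As a rough sketch of that search, the snippet below builds a query string and passes it to Tweepy's `Client.search_recent_tweets`. The product name, the helper names `build_query` and `fetch_recent_tweets`, and the choice to filter by language and exclude retweets are assumptions for illustration. What you can actually retrieve depends on your API access level.

```python
def build_query(terms, lang="en", exclude_retweets=True):
    """Combine search terms into a Twitter API v2 search query string."""
    # Multi-word terms are quoted so they match as exact phrases.
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    query = "(" + " OR ".join(quoted) + ")"
    if lang:
        query += f" lang:{lang}"
    if exclude_retweets:
        query += " -is:retweet"
    return query

def fetch_recent_tweets(bearer_token, query, limit=100):
    """Return the text of recent tweets matching the query (needs network access)."""
    import tweepy  # imported here so build_query works without Tweepy installed

    client = tweepy.Client(bearer_token=bearer_token)
    response = client.search_recent_tweets(
        query=query,
        max_results=min(max(limit, 10), 100),  # this endpoint accepts 10 to 100 per request
        tweet_fields=["created_at", "lang"],
    )
    return [tweet.text for tweet in (response.data or [])]

# "Acme Phone X" is a made-up product used only as an example.
print(build_query(["Acme Phone X", "#AcmePhoneX"]))
# ("Acme Phone X" OR #AcmePhoneX) lang:en -is:retweet
```

To monitor a topic over time rather than collect a fixed batch, you would call `fetch_recent_tweets` on a schedule and store the results.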

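Once you have the tweets, the next step is to preprocess them. Tweets are messy. You'll often find URLs, hashtags, mentions, retweets, emojis, and inconsistent casing. All of these need to be cleaned or normalized. Common steps include removing URLs and mentions, stripping retweet markers, normalizing hashtags and casing, and deciding whether to keep, convert, or drop emojis.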
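The cleanup described above might look something like this in Python. It is a minimal sketch using only regular expressions. The function name `clean_tweet` and the exact patterns are assumptions, and real pipelines often need more cases.

```python
import re

def clean_tweet(text: str) -> str:
    """Apply basic normalization to a raw tweet."""
    text = re.sub(r"^RT\s+@\w+:\s*", "", text)  # drop a leading retweet marker
    text = re.sub(r"https?://\S+", "", text)    # remove URLs
    text = re.sub(r"@\w+", "", text)            # remove mentions
    text = re.sub(r"#(\w+)", r"\1", text)       # keep the hashtag word, drop the '#'
    text = text.lower()                          # normalize casing
    return re.sub(r"\s+", " ", text).strip()    # collapse leftover whitespace

raw = "RT @acme: Loving the NEW #AcmePhone!! https://t.co/abc123 @friend"
print(clean_tweet(raw))  # loving the new acmephone!!
```

This sketch leaves emojis and punctuation in place, since both can carry sentiment. If your model uses capitalization as a signal, you may want to skip the lowercasing step as well.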

Sometimes, tweets need more than mechanical cleanup. Slang, abbreviations, and regional expressions can confuse models. Domain-specific sentiment analysis may require building a custom dictionary or even fine-tuning a model to the specific tone and content of your subject area.

Not every sentiment model works well on Twitter. Tweets are short, often sarcastic, or full of abbreviations, which can trip up traditional models. If you're working on a small project, you might start with something simple like VADER (Valence Aware Dictionary and sEntiment Reasoner). It’s rule-based and tuned for social media, and it works right out of the box in Python. VADER even handles capitalization, punctuation, and emoticons fairly well.
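Here is a small sketch of VADER in use, assuming the `vaderSentiment` package is installed. VADER returns a `compound` score between -1 and 1. The cutoffs of 0.05 and -0.05 below are the commonly cited convention rather than a fixed rule, and the helper names are made up for this example.

```python
def label_from_compound(compound: float, threshold: float = 0.05) -> str:
    """Map a VADER compound score in [-1, 1] to a sentiment label."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"

def vader_label(text: str) -> str:
    """Score text with VADER and return a label (requires vaderSentiment)."""
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    analyzer = SentimentIntensityAnalyzer()
    scores = analyzer.polarity_scores(text)  # keys: neg, neu, pos, compound
    return label_from_compound(scores["compound"])

# Example call (needs the vaderSentiment package):
# vader_label("The new update is AMAZING!!! :)")
```

Keeping the threshold in its own function makes it easy to widen the neutral band if too many borderline tweets are being labelled positive or negative.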

If you want deeper accuracy and can afford the complexity, transformer-based models like BERT or its variants (like RoBERTa or DistilBERT) trained on social media data offer stronger performance. These models understand the context and tone better than simpler methods. You can use pre-trained versions from platforms like Hugging Face or fine-tune them with your own labelled tweet data if you have enough examples.
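As an illustration, a pre-trained model from Hugging Face can be loaded through the `transformers` `pipeline` helper. The model name below is one publicly available RoBERTa variant trained on tweets and is an assumption here, not a recommendation from the original text. The helper names are also invented for this sketch. The first call downloads the model, so it needs network access and some disk space.

```python
def classify_with_transformer(
    texts, model_name="cardiffnlp/twitter-roberta-base-sentiment-latest"
):
    """Run a pre-trained sentiment model over a list of texts."""
    from transformers import pipeline  # imported lazily; a heavy dependency

    classifier = pipeline("sentiment-analysis", model=model_name)
    return classifier(list(texts))  # one {"label": ..., "score": ...} dict per text

def pair_results(texts, results):
    """Attach each text to its predicted label and rounded confidence score."""
    return [
        {"text": t, "label": r["label"].lower(), "score": round(r["score"], 3)}
        for t, r in zip(texts, results)
    ]

# Example call (downloads the model on first use):
# tweets = ["battery life is unreal", "worst update ever"]
# rows = pair_results(tweets, classify_with_transformer(tweets))
```

Label names vary between models, so check the model card before mapping them to your own categories.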

Training a model from scratch isn't usually necessary unless you're working in a highly specialized area. Fine-tuning a pre-trained general model often provides better results without needing millions of training examples.

Once you apply a model to your dataset, you’ll get back sentiment labels—usually “positive”, “negative”, or “neutral”—along with a confidence score. These can then be aggregated to get a sense of public opinion on a topic over time.
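One simple way to aggregate those labels, sketched below, is to compute the share of each label and optionally ignore low-confidence predictions. The function name `summarize`, the `min_confidence` parameter, and the sample predictions are all made up for illustration.

```python
from collections import Counter

LABELS = ("positive", "neutral", "negative")

def summarize(predictions, min_confidence=0.0):
    """Return each label's share among predictions at or above a confidence cutoff."""
    kept = [p["label"] for p in predictions if p["score"] >= min_confidence]
    if not kept:
        return {}
    counts = Counter(kept)
    return {label: counts[label] / len(kept) for label in LABELS}

# Made-up model output for four tweets.
predictions = [
    {"label": "positive", "score": 0.90},
    {"label": "negative", "score": 0.80},
    {"label": "positive", "score": 0.55},
    {"label": "neutral", "score": 0.70},
]
print(summarize(predictions))
# {'positive': 0.5, 'neutral': 0.25, 'negative': 0.25}
```

Raising `min_confidence` trades coverage for reliability: fewer tweets are counted, but the ones that remain are those the model was more sure about.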

Raw sentiment labels aren’t very useful without context. A key part of sentiment analysis on Twitter is transforming those labels into something that gives you a broader view. This might be visualizing how sentiment changes over days or weeks, especially around product launches, public statements, or breaking news.

You can chart sentiment over time to spot spikes—was there a surge in negativity after a poor customer service tweet went viral? Did positivity grow after a company made an announcement? Pairing sentiment with volume (how many tweets were posted) gives a fuller picture of engagement and tone.
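A minimal sketch of that pairing groups labelled tweets by day and reports both volume and a net sentiment figure, defined here as the share of positive tweets minus the share of negative ones. The function name `daily_sentiment`, the net-sentiment definition, and the sample rows are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime

def daily_sentiment(rows):
    """Group (timestamp, label) pairs by day; return volume and net sentiment per day."""
    buckets = defaultdict(list)
    for created_at, label in rows:
        buckets[created_at.date()].append(label)

    summary = {}
    for day in sorted(buckets):
        labels = buckets[day]
        # Net sentiment: (positive count - negative count) / total, between -1 and 1.
        net = (labels.count("positive") - labels.count("negative")) / len(labels)
        summary[day.isoformat()] = {"volume": len(labels), "net": net}
    return summary

# Made-up labelled tweets spanning two days.
rows = [
    (datetime(2024, 3, 1, 9, 0), "positive"),
    (datetime(2024, 3, 1, 12, 30), "negative"),
    (datetime(2024, 3, 1, 18, 45), "positive"),
    (datetime(2024, 3, 1, 21, 10), "neutral"),
    (datetime(2024, 3, 2, 8, 15), "negative"),
    (datetime(2024, 3, 2, 8, 20), "negative"),
]
for day, stats in daily_sentiment(rows).items():
    print(day, stats)
```

The output is a plain dictionary keyed by date, which can be passed to any plotting library. A day with strongly negative net sentiment but only a handful of tweets deserves less weight than the same score across thousands.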

Filtering by geography, language, or account type (influencers vs. general public) can also add layers of insight. Sometimes, the general sentiment is neutral, but a small group of influential users is highly negative or positive—that’s worth knowing.

For businesses, this can guide decisions on messaging, timing, and outreach. For researchers, it can provide a way to track public mood during events like elections or natural disasters. For developers, it’s a strong use case for learning natural language processing and working with real-world data.

Sentiment analysis on Twitter provides a clear view of public opinion in real time. It turns scattered tweets into structured insight, helping businesses, researchers, and developers understand reactions to events, products, or trends. With accessible tools and data, anyone can begin exploring it. While Twitter's language can be tricky, the effort pays off by connecting raw expression to measurable patterns. It’s a useful, relevant skill in today’s data-driven world.

Know the pros and cons of using JavaScript for machine learning, including key tools, benefits, and when it can work best

AI in wearable technology is changing the way people track their health. Learn how smart devices use AI for real-time health monitoring, chronic care, and better wellness

Learn how to lock Excel cells, protect formulas, and control access to ensure your data stays accurate and secure.

Explore the role of probability in AI and how it enables intelligent decision-making in uncertain environments. Learn how probabilistic models drive core AI functions

The development of chatbots throughout 2025 will lead to emerging cybersecurity threats that they must confront.

Know how to produce synthetic data for deep learning, conserve resources, and improve model accuracy by applying many methods

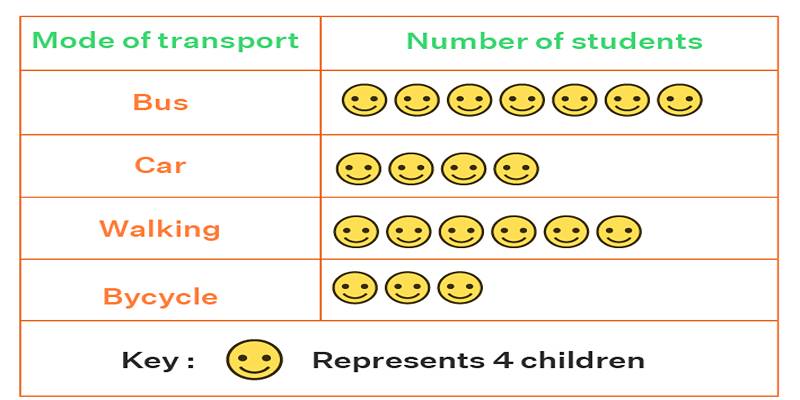

Learn what a pictogram graph is, how it's used, and why it's great for data visualization. Explore tips, examples, and benefits.

To decide which of the shelf and custom-built machine learning models best fit your company, weigh their advantages and drawbacks

The Black Box Problem in AI highlights the difficulty of understanding AI decisions. Learn why transparency matters, how it affects trust, and what methods are used to make AI systems more explainable

Create profoundly relevant, highly engaging material using AI and psychographics that drives outcomes and increases participation

AI for Accessibility is transforming daily life by Assisting People with Disabilities through smart tools, voice assistants, and innovative solutions that promote independence and inclusion

A recent study reveals OpenAI’s new model producing responses rated as more human than humans. Learn how it challenges our ideas about communication and authenticity